Block Manager

Abstract

The block manager is a key component of full nodes and is responsible for block production or block syncing depending on the node type: sequencer or non-sequencer. Block syncing in this context includes retrieving the published blocks from the network (P2P network or DA network), validating them to raise fraud proofs upon validation failure, updating the state, and storing the validated blocks. A full node invokes multiple block manager functionalities in parallel, such as:

- Block Production (only for sequencer full nodes)

- Block Publication to DA network

- Block Retrieval from DA network

- Block Sync Service

- Block Publication to P2P network

- Block Retrieval from P2P network

- State Update after Block Retrieval

sequenceDiagram

title Overview of Block Manager

participant User

participant Sequencer

participant Full Node 1

participant Full Node 2

participant DA Layer

User->>Sequencer: Send Tx

Sequencer->>Sequencer: Generate Block

Sequencer->>DA Layer: Publish Block

Sequencer->>Full Node 1: Gossip Block

Sequencer->>Full Node 2: Gossip Block

Full Node 1->>Full Node 1: Verify Block

Full Node 1->>Full Node 2: Gossip Block

Full Node 1->>Full Node 1: Mark Block Soft Confirmed

Full Node 2->>Full Node 2: Verify Block

Full Node 2->>Full Node 2: Mark Block Soft Confirmed

DA Layer->>Full Node 1: Retrieve Block

Full Node 1->>Full Node 1: Mark Block DA Included

DA Layer->>Full Node 2: Retrieve Block

Full Node 2->>Full Node 2: Mark Block DA Included

Protocol/Component Description

The block manager is initialized using several parameters as defined below:

| Name | Type | Description |
|---|---|---|
| signing key | crypto.PrivKey | used for signing a block after it is created |
| config | config.BlockManagerConfig | block manager configurations (see config options below) |
| genesis | *cmtypes.GenesisDoc | initialize the block manager with genesis state (genesis configuration defined in config/genesis.json file under the app directory) |
| store | store.Store | local datastore for storing rollup blocks and states (default local store path is $db_dir/rollkit, where db_dir is specified in the config.yaml file under the app directory) |
| mempool, proxyapp, eventbus | mempool.Mempool, proxy.AppConnConsensus, *cmtypes.EventBus | for initializing the executor (state transition function). mempool is also used in the manager to check for availability of transactions for lazy block production |
| dalc | da.DAClient | the data availability light client used to submit blocks to and retrieve blocks from the DA network |
| blockstore | *goheaderstore.Store[*types.Block] | to retrieve blocks gossiped over the P2P network |

Block manager configuration options:

| Name | Type | Description |
|---|---|---|
| BlockTime | time.Duration | time interval used for block production and block retrieval from block store (defaultBlockTime) |
| DABlockTime | time.Duration | time interval used for both block publication to DA network and block retrieval from DA network (defaultDABlockTime) |
| DAStartHeight | uint64 | block retrieval from DA network starts from this height |
| LazyBlockInterval | time.Duration | time interval used for block production in lazy aggregator mode even when there are no transactions (defaultLazyBlockTime) |
| LazyMode | bool | when set to true, enables lazy aggregation mode which produces blocks only when transactions are available or at LazyBlockInterval intervals |
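As a rough illustration, the options above could be grouped into a Go struct like the one below. The field names follow the table, but the values returned by `exampleConfig` and the helper `maxIdleInterval` are hypothetical and are not Rollkit's actual defaults or API.

```go
package main

import (
	"fmt"
	"time"
)

// BlockManagerConfig mirrors the options listed in the table above.
type BlockManagerConfig struct {
	BlockTime         time.Duration // block production and block store polling interval
	DABlockTime       time.Duration // DA publication and DA retrieval interval
	DAStartHeight     uint64        // first DA height to retrieve from
	LazyBlockInterval time.Duration // longest gap between blocks in lazy mode
	LazyMode          bool          // enables lazy aggregation
}

// exampleConfig returns illustrative values only (assumption: these are
// NOT the real defaultBlockTime / defaultDABlockTime / defaultLazyBlockTime).
func exampleConfig() BlockManagerConfig {
	return BlockManagerConfig{
		BlockTime:         1 * time.Second,
		DABlockTime:       6 * time.Second,
		DAStartHeight:     0,
		LazyBlockInterval: 30 * time.Second,
		LazyMode:          true,
	}
}

// maxIdleInterval (hypothetical helper) reports how long a sequencer waits
// before producing a block when no transactions arrive.
func (c BlockManagerConfig) maxIdleInterval() time.Duration {
	if c.LazyMode {
		return c.LazyBlockInterval
	}
	return c.BlockTime
}

func main() {
	cfg := exampleConfig()
	fmt.Printf("%+v\n", cfg)
	fmt.Println("max idle interval:", cfg.maxIdleInterval())
}
```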

Block Production

When the full node is operating as a sequencer (aka aggregator), the block manager runs the block production logic. There are two modes of block production, which can be specified in the block manager configurations: normal and lazy.

In normal mode, the block manager runs a timer, which is set to the BlockTime configuration parameter, and continuously produces blocks at BlockTime intervals.

In lazy mode, the block manager implements a dual timer mechanism:

- A blockTimer that triggers block production at regular intervals when transactions are available

- A lazyTimer that ensures blocks are produced at LazyBlockInterval intervals even during periods of inactivity

The block manager starts building a block when any transaction becomes available in the mempool via a notification channel (txNotifyCh). When the Reaper detects new transactions, it calls Manager.NotifyNewTransactions(), which performs a non-blocking signal on this channel. The block manager also produces empty blocks at regular intervals to maintain consistency with the DA layer, ensuring a 1:1 mapping between DA layer blocks and execution layer blocks.
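The dual timer mechanism and the non-blocking notification can be sketched as follows. This is a minimal model, not the actual Rollkit loop: the `manager` type, `lazyLoop`, `resetTimer`, and the `produce` callback are hypothetical, and the channel is assumed to be buffered with capacity 1.

```go
package main

import (
	"context"
	"fmt"
	"time"
)

// manager is a stripped-down stand-in for the block manager.
type manager struct {
	txNotifyCh chan struct{} // assumed to be buffered with capacity 1
}

func newManager() *manager {
	return &manager{txNotifyCh: make(chan struct{}, 1)}
}

// NotifyNewTransactions signals without blocking: if a signal is already
// pending, the new one is dropped, so the caller never waits.
func (m *manager) NotifyNewTransactions() {
	select {
	case m.txNotifyCh <- struct{}{}:
	default:
	}
}

// resetTimer stops t, drains a pending tick if there is one, and restarts it.
func resetTimer(t *time.Timer, d time.Duration) {
	if !t.Stop() {
		select {
		case <-t.C:
		default:
		}
	}
	t.Reset(d)
}

// lazyLoop calls produce(false) on blockTimer ticks when transactions are
// pending, and produce(true) on lazyTimer ticks when nothing arrived.
func (m *manager) lazyLoop(ctx context.Context, blockTime, lazyInterval time.Duration, produce func(empty bool)) {
	blockTimer := time.NewTimer(blockTime)
	lazyTimer := time.NewTimer(lazyInterval)
	defer blockTimer.Stop()
	defer lazyTimer.Stop()

	txsAvailable := false
	for {
		select {
		case <-ctx.Done():
			return
		case <-m.txNotifyCh:
			txsAvailable = true
		case <-blockTimer.C:
			if txsAvailable {
				produce(false)
				txsAvailable = false
				resetTimer(lazyTimer, lazyInterval) // a block was just produced
			}
			blockTimer.Reset(blockTime)
		case <-lazyTimer.C:
			produce(!txsAvailable)
			txsAvailable = false
			lazyTimer.Reset(lazyInterval)
		}
	}
}

func main() {
	m := newManager()
	ctx, cancel := context.WithTimeout(context.Background(), 200*time.Millisecond)
	defer cancel()

	m.NotifyNewTransactions()
	m.NotifyNewTransactions() // dropped: a signal is already pending

	full, empty := 0, 0
	m.lazyLoop(ctx, 10*time.Millisecond, 50*time.Millisecond, func(isEmpty bool) {
		if isEmpty {
			empty++
		} else {
			full++
		}
	})
	fmt.Printf("blocks with transactions: %d, empty blocks: %d\n", full, empty)
}
```

Dropping a signal when one is already pending is safe in this model because the signal only says "transactions exist"; it carries no payload that could be lost.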

Building the Block

The block manager of the sequencer nodes performs the following steps to produce a block:

- Call CreateBlock using executor

- Sign the block using signing key to generate commitment

- Call ApplyBlock using executor to generate an updated state

- Save the block, validators, and updated state to local store

- Add the newly generated block to pendingBlocks queue

- Publish the newly generated block to channels to notify other components of the sequencer node (such as block and header gossip)
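The steps above can be sketched with toy types. Everything here is an assumption made for illustration: `Block`, `State`, `toyExecutor`, `sequencer`, and `blockBytes` are hypothetical stand-ins, Ed25519 is used only as an example signature scheme, and validator handling is omitted.

```go
package main

import (
	"crypto/ed25519"
	"crypto/sha256"
	"encoding/binary"
	"fmt"
)

type Block struct {
	Height    uint64
	Txs       [][]byte
	Signature []byte
}

type State struct {
	LastHeight uint64
	AppHash    [32]byte
}

// toyExecutor stands in for the real executor (state transition function).
type toyExecutor struct{}

func (toyExecutor) CreateBlock(height uint64, txs [][]byte) *Block {
	return &Block{Height: height, Txs: txs}
}

// ApplyBlock folds the transactions into a hash to imitate a state update.
func (toyExecutor) ApplyBlock(s State, b *Block) State {
	h := sha256.New()
	h.Write(s.AppHash[:])
	for _, tx := range b.Txs {
		h.Write(tx)
	}
	next := State{LastHeight: b.Height}
	copy(next.AppHash[:], h.Sum(nil))
	return next
}

// blockBytes is a hypothetical canonical encoding used as the signed message.
func blockBytes(b *Block) []byte {
	h := sha256.New()
	var hb [8]byte
	binary.BigEndian.PutUint64(hb[:], b.Height)
	h.Write(hb[:])
	for _, tx := range b.Txs {
		h.Write(tx)
	}
	return h.Sum(nil)
}

type sequencer struct {
	key           ed25519.PrivateKey
	exec          toyExecutor
	state         State
	store         map[uint64]*Block
	pendingBlocks []*Block
	blockCh       chan *Block
}

func newSequencer() (*sequencer, error) {
	_, priv, err := ed25519.GenerateKey(nil) // nil selects crypto/rand
	if err != nil {
		return nil, err
	}
	return &sequencer{key: priv, store: map[uint64]*Block{}, blockCh: make(chan *Block, 16)}, nil
}

func (s *sequencer) produceBlock(txs [][]byte) *Block {
	b := s.exec.CreateBlock(s.state.LastHeight+1, txs) // 1. create
	b.Signature = ed25519.Sign(s.key, blockBytes(b))   // 2. sign
	s.state = s.exec.ApplyBlock(s.state, b)            // 3. apply
	s.store[b.Height] = b                              // 4. save
	s.pendingBlocks = append(s.pendingBlocks, b)       // 5. queue for DA
	s.blockCh <- b                                     // 6. notify gossip
	return b
}

// verify checks a block's signature against the sequencer's public key.
func (s *sequencer) verify(b *Block) bool {
	pub := s.key.Public().(ed25519.PublicKey)
	return ed25519.Verify(pub, blockBytes(b), b.Signature)
}

func main() {
	seq, err := newSequencer()
	if err != nil {
		panic(err)
	}
	b := seq.produceBlock([][]byte{[]byte("tx1")})
	fmt.Println(b.Height, seq.verify(b), len(seq.pendingBlocks))
}
```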

Block Publication to DA Network

The block manager of the sequencer full nodes regularly publishes the produced blocks (those pending in the pendingBlocks queue) to the DA network at intervals set by the DABlockTime configuration parameter defined in the block manager config. If publishing a block to the DA network fails, the manager makes up to maxSubmitAttempts attempts, with an exponential backoff interval between attempts. The backoff interval starts at initialBackoff, doubles on each subsequent attempt, and is capped at DABlockTime. A successful publish empties the pendingBlocks queue; a failure is reported as an error and leaves the pendingBlocks queue intact.
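The retry schedule described above can be sketched as follows. The function names (`nextBackoff`, `backoffSchedule`, `submitWithRetry`) and the injected `sleep` parameter are hypothetical; only the doubling, the cap, and the attempt limit come from the text.

```go
package main

import (
	"errors"
	"fmt"
	"time"
)

var errTransient = errors.New("transient DA error")

// nextBackoff starts at initial, doubles on each call, and never exceeds limit.
func nextBackoff(cur, initial, limit time.Duration) time.Duration {
	next := initial
	if cur > 0 {
		next = cur * 2
	}
	if next > limit {
		next = limit
	}
	return next
}

// backoffSchedule lists the waits between maxAttempts consecutive failures.
func backoffSchedule(initial, limit time.Duration, maxAttempts int) []time.Duration {
	var out []time.Duration
	var cur time.Duration
	for i := 1; i < maxAttempts; i++ {
		cur = nextBackoff(cur, initial, limit)
		out = append(out, cur)
	}
	return out
}

// noSleep lets callers exercise the retry logic without real delays.
func noSleep(time.Duration) {}

// submitWithRetry calls submit until it succeeds or maxAttempts is reached.
// It returns the number of attempts made. On failure the caller is expected
// to leave the pending queue untouched.
func submitWithRetry(submit func() error, maxAttempts int, initial, limit time.Duration, sleep func(time.Duration)) (int, error) {
	var cur time.Duration
	var err error
	for attempt := 1; attempt <= maxAttempts; attempt++ {
		if err = submit(); err == nil {
			return attempt, nil
		}
		if attempt < maxAttempts {
			cur = nextBackoff(cur, initial, limit)
			sleep(cur)
		}
	}
	return maxAttempts, fmt.Errorf("giving up after %d attempts: %w", maxAttempts, err)
}

func main() {
	// With initialBackoff = 100ms and DABlockTime = 1s, six attempts
	// are separated by five waits.
	fmt.Println(backoffSchedule(100*time.Millisecond, time.Second, 6))

	calls := 0
	n, err := submitWithRetry(func() error {
		calls++
		if calls < 3 {
			return errTransient
		}
		return nil
	}, 5, 100*time.Millisecond, time.Second, noSleep)
	fmt.Println(n, err)
}
```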

Block Retrieval from DA Network

The block manager of the full nodes regularly pulls blocks from the DA network at DABlockTime intervals, starting from the DA height read from the last state stored in the local store or the DAStartHeight configuration parameter, whichever is greater. The block manager also maintains the daHeight counter and increments it after each DA pull that does not end in an error. The pull is made by issuing a RetrieveBlocks(daHeight) request through the Data Availability Light Client (DALC) retriever, which returns one of Success, NotFound, or Error. On Error, the request is retried with a delay of 100 milliseconds between retries; after 10 retries, an error is logged and the daHeight counter is not incremented, which intentionally stalls the block retrieval logic. NotFound is not treated as an error, because it is acceptable to have no rollup block at a given DA height; the daHeight counter is incremented in this case. On Success, the retrieved blocks are marked as DA included and sent to be applied (state update). A successful state update triggers fresh DA and block store pulls without waiting for the DABlockTime and BlockTime intervals. For more details on DA integration, see the Data Availability specification.

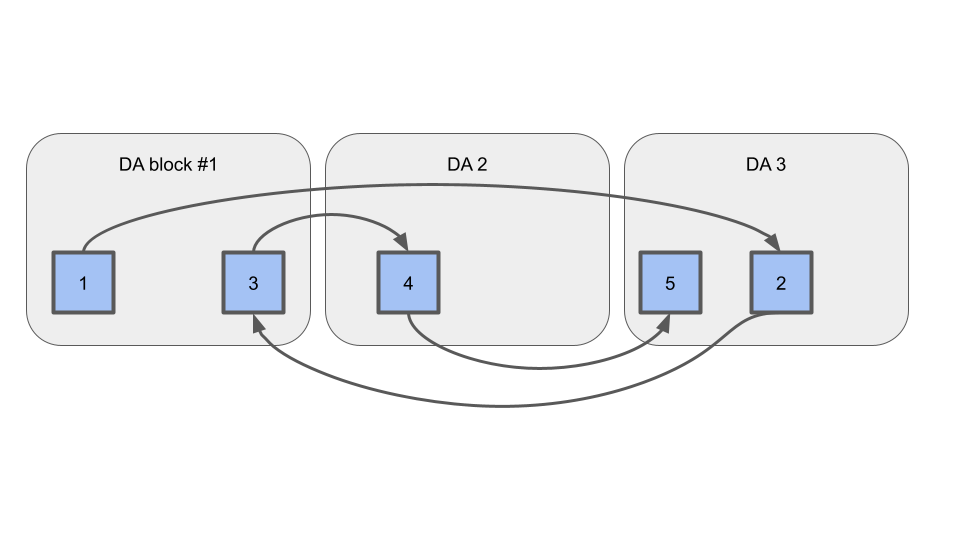
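A single DA pull with the three outcomes can be modelled as below. The `Status` type, `retrieveFn`, and `retrieveStep` are hypothetical names; the sketch also assumes that "10 retries" means at most 10 calls in total, which the text does not state precisely.

```go
package main

import (
	"errors"
	"fmt"
	"time"
)

type Status int

const (
	StatusSuccess Status = iota
	StatusNotFound
	StatusError
)

const (
	maxRetries = 10                     // assumption: total number of calls
	retryDelay = 100 * time.Millisecond // delay between retries
)

// retrieveFn stands in for a RetrieveBlocks(daHeight) request.
type retrieveFn func(daHeight uint64) (Status, []string)

var errRetrieveFailed = errors.New("DA retrieval failed")

// noSleep lets callers exercise the retry logic without real delays.
func noSleep(time.Duration) {}

// retrieveStep performs one pull and returns the next daHeight to query.
// Success and NotFound advance the height; repeated errors leave it unchanged,
// so the caller stalls at the same height.
func retrieveStep(daHeight uint64, retrieve retrieveFn, sleep func(time.Duration)) (uint64, []string, error) {
	for attempt := 1; attempt <= maxRetries; attempt++ {
		status, blocks := retrieve(daHeight)
		switch status {
		case StatusSuccess:
			return daHeight + 1, blocks, nil // blocks go on to be marked DA included
		case StatusNotFound:
			return daHeight + 1, nil, nil // no rollup block at this height
		}
		if attempt < maxRetries {
			sleep(retryDelay)
		}
	}
	return daHeight, nil, fmt.Errorf("height %d: %w", daHeight, errRetrieveFailed)
}

func main() {
	// A toy DA layer: a rollup block at height 2 only, and a failing height 3.
	da := func(h uint64) (Status, []string) {
		switch h {
		case 2:
			return StatusSuccess, []string{"rollup-block-1"}
		case 3:
			return StatusError, nil
		}
		return StatusNotFound, nil
	}

	height := uint64(1)
	for i := 0; i < 3; i++ {
		next, blocks, err := retrieveStep(height, da, noSleep)
		fmt.Println(height, "->", next, blocks, err)
		height = next
	}
}
```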

Out-of-Order Rollup Blocks on DA

Rollkit should support blocks arriving out-of-order on DA, like so:

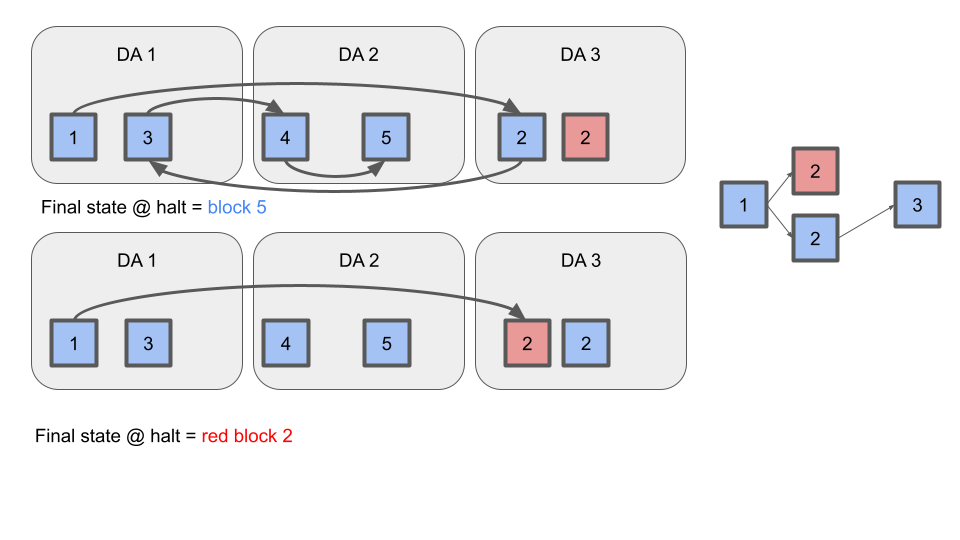
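One common way to tolerate out-of-order arrival is to cache blocks by height and apply them only when the next expected height is present. The sketch below illustrates that idea; it is an assumption about a possible approach, not a description of Rollkit's implementation, and the `syncer` type is hypothetical.

```go
package main

import "fmt"

// syncer buffers blocks that arrive ahead of the next expected height.
type syncer struct {
	nextHeight uint64
	cache      map[uint64]string
	applied    []uint64
}

func newSyncer() *syncer {
	return &syncer{nextHeight: 1, cache: map[uint64]string{}}
}

// onBlock caches the block, then applies every consecutive block that is
// now available, starting from nextHeight.
func (s *syncer) onBlock(height uint64, data string) {
	if height < s.nextHeight {
		return // already applied
	}
	s.cache[height] = data
	for {
		if _, ok := s.cache[s.nextHeight]; !ok {
			return // gap: wait for the missing height
		}
		delete(s.cache, s.nextHeight)
		s.applied = append(s.applied, s.nextHeight)
		s.nextHeight++
	}
}

func main() {
	s := newSyncer()
	for _, h := range []uint64{3, 1, 2, 5} {
		s.onBlock(h, fmt.Sprintf("block-%d", h))
		fmt.Println("received", h, "applied so far", s.applied)
	}
}
```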

Termination Condition

If the sequencer double-signs two blocks at the same height, evidence of the fault should be posted to DA. Rollkit full nodes should process the longest valid chain up to the height of the fault evidence, and terminate. See diagram:

Block Sync Service

The block sync service is created during full node initialization. After that, during the block manager's initialization, a pointer to the block store inside the block sync service is passed to it. Blocks created in the block manager are then passed to the BlockCh channel and then sent to the go-header service to be gossiped over the P2P network.

Block Publication to P2P network

Blocks created by the sequencer that are ready to be published to the P2P network are sent to the BlockCh channel in Block Manager inside publishLoop.

The blockPublishLoop in the full node continuously listens for new blocks from the BlockCh channel. When a new block is received, it is written to the block store and broadcast to the network using the block sync service.

Among non-sequencer full nodes, all the block gossiping is handled by the block sync service, and they do not need to publish blocks to the P2P network using any of the block manager components.

Block Retrieval from P2P network

For non-sequencer full nodes, blocks gossiped through the P2P network are retrieved from the Block Store in BlockStoreRetrieveLoop in Block Manager.

Starting off with a block store height of zero, for every blockTime unit of time, a signal is sent to the blockStoreCh channel in the block manager and when this signal is received, the BlockStoreRetrieveLoop retrieves blocks from the block store.

It keeps track of the last retrieved block's height and every time the current block store's height is greater than the last retrieved block's height, it retrieves all blocks from the block store that are between these two heights.

For each retrieved block, it sends a new block event to the blockInCh channel which is the same channel in which blocks retrieved from the DA layer are sent.

This block is marked as soft confirmed by the validating full node until the same block data and the corresponding header are seen on the DA layer, at which point it is marked DA-included.
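The height tracking in the retrieve loop can be sketched as below. The `blockStore`, `newBlockEvent`, and `retrieveFromStore` names are hypothetical; the sketch assumes "between these two heights" means every height after the last retrieved one, up to and including the current store height.

```go
package main

import "fmt"

// newBlockEvent is what gets sent on the shared incoming-block channel.
type newBlockEvent struct {
	Height uint64
	Data   string
}

// blockStore is a toy stand-in for the P2P block store.
type blockStore struct {
	blocks map[uint64]string
	height uint64
}

func (s *blockStore) append(data string) {
	s.height++
	s.blocks[s.height] = data
}

// retrieveFromStore emits one event per block above lastRetrieved and
// returns the new last retrieved height.
func retrieveFromStore(store *blockStore, lastRetrieved uint64, blockInCh chan<- newBlockEvent) uint64 {
	for h := lastRetrieved + 1; h <= store.height; h++ {
		data, ok := store.blocks[h]
		if !ok {
			return h - 1 // stop at a gap and retry on the next signal
		}
		blockInCh <- newBlockEvent{Height: h, Data: data}
	}
	if store.height > lastRetrieved {
		return store.height
	}
	return lastRetrieved
}

func main() {
	store := &blockStore{blocks: map[uint64]string{}}
	blockInCh := make(chan newBlockEvent, 8)

	store.append("a")
	store.append("b")
	last := retrieveFromStore(store, 0, blockInCh)
	fmt.Println("last retrieved:", last, "queued events:", len(blockInCh))

	store.append("c")
	last = retrieveFromStore(store, last, blockInCh)
	fmt.Println("last retrieved:", last, "queued events:", len(blockInCh))
}
```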

About Soft Confirmations and DA Inclusions

The block manager retrieves blocks from both the P2P network and the underlying DA network because the blocks are available in the P2P network faster and DA retrieval is slower (e.g., 1 second vs 6 seconds).

The blocks retrieved from the P2P network are only marked as soft confirmed until the DA retrieval succeeds on those blocks and they are marked DA-included.

DA-included blocks are considered to have a higher level of finality.

DAIncluderLoop:

A new loop, DAIncluderLoop, is responsible for advancing the DAIncludedHeight by checking if blocks after the current height have both their header and data marked as DA-included in the caches.

If either the header or data is missing, the loop stops advancing.

This ensures that only blocks with both header and data present are considered DA-included.
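The advancing rule can be expressed in a few lines. In this sketch the caches are keyed by height for simplicity, which is an assumption; `daCache` and `advanceDAIncludedHeight` are hypothetical names.

```go
package main

import "fmt"

// daCache records which headers and data have been seen on the DA layer.
// Assumption: entries are keyed by block height.
type daCache struct {
	headerIncluded map[uint64]bool
	dataIncluded   map[uint64]bool
}

// advanceDAIncludedHeight moves the height forward while the next block has
// both its header and its data marked DA-included, and stops at the first
// height where either is missing.
func advanceDAIncludedHeight(current uint64, c *daCache) uint64 {
	for c.headerIncluded[current+1] && c.dataIncluded[current+1] {
		current++
	}
	return current
}

func main() {
	c := &daCache{
		headerIncluded: map[uint64]bool{1: true, 2: true, 3: true},
		dataIncluded:   map[uint64]bool{1: true, 2: true},
	}
	h := advanceDAIncludedHeight(0, c)
	fmt.Println("DA included height:", h) // data for height 3 is still missing

	c.dataIncluded[3] = true
	fmt.Println("DA included height:", advanceDAIncludedHeight(h, c))
}
```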

State Update after Block Retrieval

The block manager stores and applies the block to update its state every time a new block is retrieved either via the P2P or DA network. State update involves:

- ApplyBlock using executor: validates the block, executes the block (applies the transactions), captures the validator updates, and creates an updated state.

- Commit using executor: commit the execution and changes, update mempool, and publish events

- Store the block, the validators, and the updated state.
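The update flow can be sketched with a toy node. The sketch also models the rule that a block already applied via P2P is not applied a second time when it later arrives from DA, and is only marked DA included. All types and names here (`blockEvent`, `node`, `handleBlock`) are hypothetical, and validator handling is omitted.

```go
package main

import "fmt"

type blockEvent struct {
	Height uint64
	Txs    []string
	FromDA bool
}

// node holds a toy state: the list of executed transactions.
type node struct {
	height     uint64
	executed   []string
	store      map[uint64]blockEvent
	daIncluded map[uint64]bool
}

func newNode() *node {
	return &node{store: map[uint64]blockEvent{}, daIncluded: map[uint64]bool{}}
}

// handleBlock reports whether the block was applied.
func (n *node) handleBlock(ev blockEvent) (bool, error) {
	if ev.Height <= n.height {
		// Already applied earlier: only record DA inclusion.
		if ev.FromDA {
			n.daIncluded[ev.Height] = true
		}
		return false, nil
	}
	if ev.Height != n.height+1 {
		return false, fmt.Errorf("expected height %d, got %d", n.height+1, ev.Height)
	}
	// ApplyBlock: compute the updated state without mutating the current one.
	next := append(append([]string{}, n.executed...), ev.Txs...)
	// Commit: make the updated state current.
	n.executed = next
	n.height = ev.Height
	// Store the block.
	n.store[ev.Height] = ev
	if ev.FromDA {
		n.daIncluded[ev.Height] = true
	}
	return true, nil
}

func main() {
	n := newNode()
	applied, _ := n.handleBlock(blockEvent{Height: 1, Txs: []string{"tx1"}})
	fmt.Println("from P2P, applied:", applied, "DA included:", n.daIncluded[1])

	applied, _ = n.handleBlock(blockEvent{Height: 1, Txs: []string{"tx1"}, FromDA: true})
	fmt.Println("from DA, applied:", applied, "DA included:", n.daIncluded[1])
}
```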

Message Structure/Communication Format

The communication between the block manager and executor:

- InitChain: initializes the chain state with the given genesis time, initial height, and chain ID using InitChainSync on the executor to obtain initial appHash and initialize the state.

- CreateBlock: prepares a block by polling transactions from mempool.

- ApplyBlock: validates the block, executes the block (applies transactions), captures validator updates, and creates and returns the updated state.

- SetFinal: sets the block as final when its corresponding header and data are seen on the DA layer.

Assumptions and Considerations

- The block manager loads the initial state from the local store and uses genesis if not found in the local store, when the node (re)starts.

- The default mode for sequencer nodes is normal (not lazy).

- The sequencer can produce empty blocks.

- In lazy aggregation mode, the block manager maintains consistency with the DA layer by producing empty blocks at regular intervals, ensuring a 1:1 mapping between DA layer blocks and execution layer blocks.

- The lazy aggregation mechanism uses a dual timer approach:

  - A blockTimer that triggers block production when transactions are available

  - A lazyTimer that ensures blocks are produced even during periods of inactivity

- Empty batches are handled differently in lazy mode - instead of discarding them, they are returned with the ErrNoBatch error, allowing the caller to create empty blocks with proper timestamps.

- Transaction notifications from the Reaper to the Manager are handled via a non-blocking notification channel (txNotifyCh) to prevent backpressure.

- The block manager uses persistent storage (disk) when the root_dir and db_path configuration parameters are specified in config.yaml file under the app directory. If these configuration parameters are not specified, the in-memory storage is used, which will not be persistent if the node stops.

- The block manager does not re-apply the block again (in other words, create a new updated state and persist it) when a block was initially applied using P2P block sync, but later was DA included during DA retrieval. The block is only marked DA included in this case.

- The data sync store is created by prefixing dataSync on the main data store.

- The genesis ChainID is used to create the PubSubTopID in go-header with the string -block appended to it. This append is because the full node also has a P2P header sync running with a different P2P network. Refer to go-header specs for more details.

- Block sync over the P2P network works only when a full node is connected to the P2P network by specifying the initial seeds to connect to via P2PConfig.Seeds configuration parameter when starting the full node.

- Node's context is passed down to all the components of the P2P block sync to control shutting down the service either abruptly (in case of failure) or gracefully (during successful scenarios).

- The block manager supports the separation of header and data structures in Rollkit. This allows for expanding the sequencing scheme beyond single sequencing and enables the use of a decentralized sequencer mode. For detailed information on this architecture, see the Header and Data Separation ADR.

- The block manager processes blocks with a minimal header format, which is designed to eliminate dependency on CometBFT's header format and can be used to produce an execution layer tailored header if needed. For details on this header structure, see the Rollkit Minimal Header specification.

Implementation

See block-manager

See tutorial for running a multi-node network with both sequencer and non-sequencer full nodes.

References

[1] Go Header

[2] Block Sync

[3] Full Node

[4] Block Manager

[5] Tutorial